AI in Cybersecurity: Fighting Scams with AI and Overcoming Data Poisoning

In recent times, the use of artificial intelligence (AI) by cyber criminals to create convincing phishing sites, deepfakes, and fraudulent content has made it even more challenging for defenders to protect individuals and organisations.

However, AI is not only a tool for attackers—it is also a powerful weapon for defenders. In this article, we will explore how AI is used to combat the evolving threat of scams, focusing on GovTech’s Anti-Scam Products (GASP) team and their innovative efforts in Singapore. From the Scam Analytics and Tactical Intervention System (SATIS) to overcoming data poisoning challenges, this article delves into the strategies and tools shaping the future of scam prevention and cybersecurity:

- The Evolution of Scams with AI
- Fighting Scams in Singapore with GovTech
- Using AI to Fight Scams in Singapore
- Overcoming Data Poisoning by Malicious Actors

The Evolution of Scams with AI

Scammers are leveraging emerging technologies like AI to enhance their malicious tactics. Hence, the need for robust cybersecurity measures has never been more urgent.

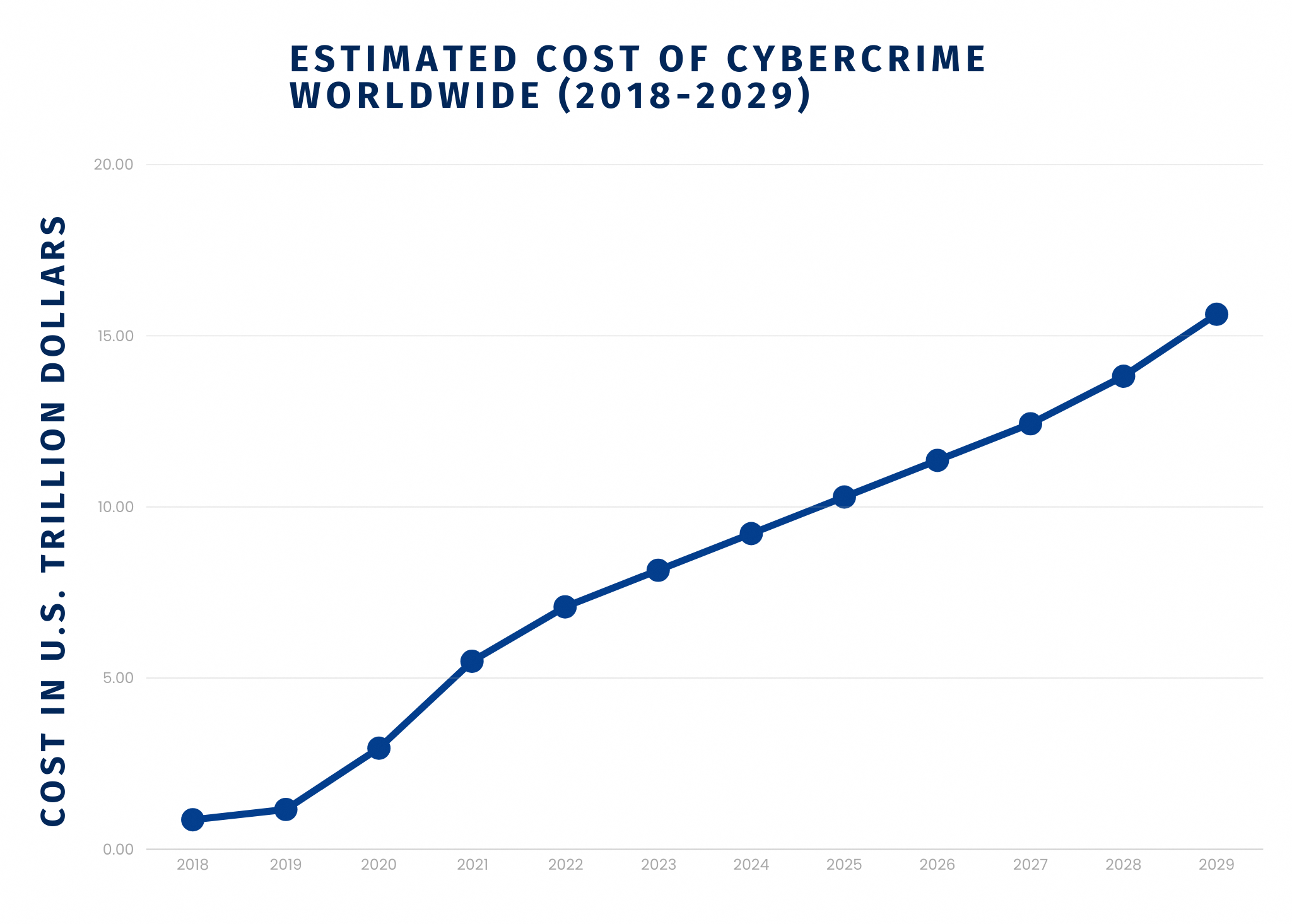

According to a Business Times article published earlier this year, scam victims in Singapore lost around S$650 million in 2023, and reported scam cases hit a record high of 46,563 that year. From a more global perspective, a report from Statista indicates that the estimated cost of cybercrime worldwide is projected to increase by US$6.4 trillion between 2024 and 2029.

Statistics taken from Statista, published 30th July 2024.

With alarming numbers like these, it’s no surprise that cybersecurity and scam prevention are growing concerns for the Singapore government and Singaporeans of all ages.

Increasing ease of access to new technology has also contributed to the growing frequency of scams and online harms. AI is one such technology, and its influence is growing as its range of applications rapidly expands. Google’s CEO, Sundar Pichai, shared similar views about the increasing significance of AI in a blog post kick-starting Google’s 25th birthday celebrations last year:

“Over time, AI will be the biggest technological shift we see in our lifetimes. It’s bigger than the shift from desktop computing to mobile, and it may be bigger than the internet itself. It’s a fundamental rewiring of technology and an incredible accelerant of human ingenuity.” - Sundar Pichai, CEO of Google

This statement from Google’s Chief Executive only reinforces what we already know: that AI is here to stay and will continue to change the way we live our lives. Despite the endless possibilities of AI for good, we have already seen how it is used for the wrong purposes, such as enhancing scam messages and calls, malware, phishing emails, and deepfake videos.

Nonetheless, there are signs that not all hope is lost, and that AI can indeed be used for public good, such as ensuring a safer cyberspace for online users.

AI Cybersecurity System Deployed at the Paris Olympic Games 2024

One positive case study comes from the Paris Olympic Games 2024. For the first time, the International Olympic Committee (IOC) used a new AI-powered monitoring service to protect athletes and officials from online abuse at both the Paris Olympic and Paralympic Games 2024.

The AI-powered tool monitored thousands of social media accounts in real-time across 35+ languages. Developed by the IOC Athletes’ Commission and the Medical and Scientific Commission, this system flags abusive messages for quick action by cybersecurity personnel, often before athletes see them.

Covering close to 15,000 athletes and 2,000 officials, the AI-driven system is part of a broader safeguarding initiative to protect athletes' physical and mental well-being. This allows them to focus fully on competing at the games and reduces the likelihood of being affected by abusive comments on social media platforms.

Fighting Scams in Singapore with GovTech’s Anti-Scam Products (GASP) Team

Closer to home, the GASP team was formed with the mission of building tech products to detect, deter, and disrupt scammers at scale. We sat down with Mr Mark Chen, Principal Product Manager at GASP, and Mr Andre Ng, Assistant Director at GASP, to find out more about how the GASP team uses AI to protect Singaporeans from online scams.

Mr Mark Chen (left) and Mr Andre Ng from GovTech’s Anti-Scam Products (GASP) team.

Protecting Singapore Residents from the Shadows with SATIS

With the increasing number of malicious websites designed to steal users’ login credentials and banking details, the GASP team developed the Scam Analytics and Tactical Intervention System (SATIS) in collaboration with the Ministry of Home Affairs (MHA). This tool actively consolidates threat intelligence, and analyses and disrupts malicious websites. These include job scam sites and bank phishing sites that mimic the look and feel of the real websites of legitimate organisations.

SATIS is powered by the recursive Machine-Learning Site Evaluator (rMSE), an in-house Machine Learning (ML) technology developed by GovTech. It works in the background and around the clock, analysing hundreds of thousands of URLs daily to identify potentially malicious sites. As of September 2024, SATIS has successfully identified and disrupted over 50,000 scam-related websites.

“With SATIS and rMSE, the police can now proactively hunt and take down malicious websites at scale before anyone falls prey. This would not be humanly possible without AI technology.” - Andre Ng

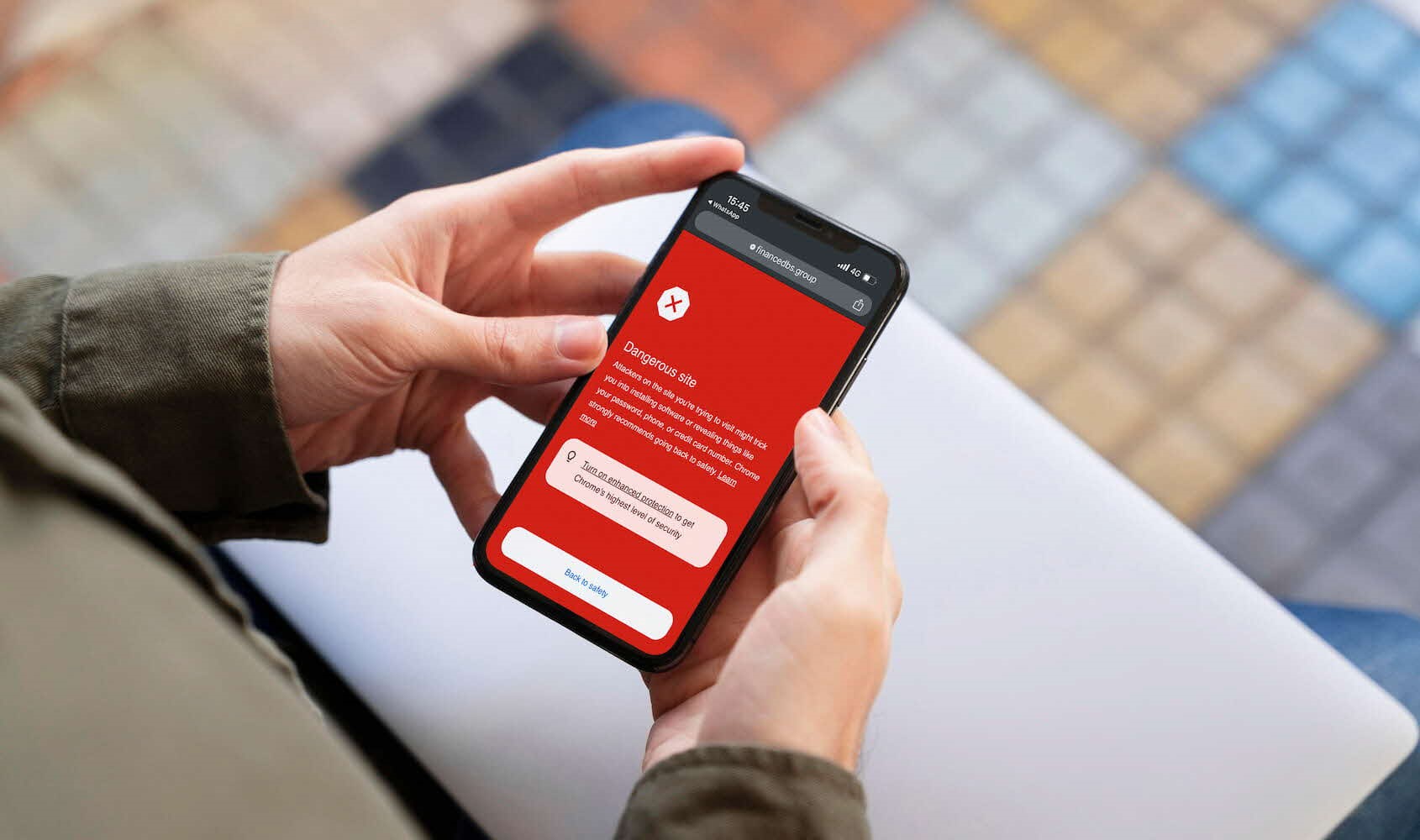

If you happen to land on a SATIS-disrupted scam website, you’ll see one of the following:

- A red warning screen notification
- A warning from the Singapore Police Force (SPF)
- An error notification (e.g. an error 404 message)

A red warning screen notification issued due to SATIS’ disruption and identification of a potentially malicious website.

How Does GASP Use AI to Fight Scams in Singapore?

In the early days (i.e. one to two years ago), finding scam sites often involved human operators manually checking for anomalies against static rules, that is, known threats and behaviours that attackers commonly use when setting up scam sites. However, this process has since evolved: ML is now used to identify unknown patterns and anomalous behaviours at a rate that is not humanly possible.
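To make the contrast concrete, here is a minimal Python sketch of the two approaches. It is not how rMSE or SATIS works internally; the keyword list, the URL features, and the function names are all hypothetical choices made for illustration. A static rule only catches patterns that someone has already written down, while a simple statistical score can flag a URL that merely looks unusual compared with a baseline of known-benign URLs.

```python
from statistics import mean, pstdev
from urllib.parse import urlparse

# Hypothetical static rules: substrings seen in previously known scam URLs.
KNOWN_BAD_KEYWORDS = ("login-verify", "free-gift", "account-suspended")


def matches_static_rule(url: str) -> bool:
    """Rule-based check: only flags patterns that are already known."""
    lowered = url.lower()
    return any(keyword in lowered for keyword in KNOWN_BAD_KEYWORDS)


def url_features(url: str) -> list[float]:
    """Turn a URL into a few numeric features (illustrative choices only)."""
    host = urlparse(url).hostname or ""
    return [
        float(len(url)),                          # overall URL length
        float(host.count(".")),                   # dots in the hostname
        float(sum(ch.isdigit() for ch in host)),  # digits in the hostname
        float(host.count("-")),                   # hyphens in the hostname
    ]


def anomaly_score(url: str, baseline_urls: list[str]) -> float:
    """Largest absolute z-score of any feature against a benign baseline."""
    baseline = [url_features(u) for u in baseline_urls]
    candidate = url_features(url)
    score = 0.0
    for i, value in enumerate(candidate):
        column = [row[i] for row in baseline]
        spread = pstdev(column) or 1.0  # fall back to 1.0 if there is no variation
        score = max(score, abs(value - mean(column)) / spread)
    return score
```

In this sketch, a URL such as `http://secure-bank-update-2024-00193.example-payments.top/confirm` matches none of the static keywords, yet it would typically score well above a baseline of ordinary URLs because of its length and the digits and hyphens in its hostname. A production system would rely on far richer features and a trained model.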

How does this work?

This is where the power of AI comes in. With AI, vast amounts of data can be analysed in a short time frame. AI can then quickly infer what counts as anomalous behaviour from the pool of data studied and subsequently perform routine security functions implemented by the GASP team. The ML technology behind rMSE, for instance, continuously improves as more data is fed into it. Over time, AI will learn and adapt to new trends in attacker behaviour and then suggest safe code fixes to counter the attackers.

This is possible because AI can meticulously scan millions of lines of code rapidly, scrutinising each part to determine if any line can be used to breach systems. However, scammers can still introduce vulnerabilities into AI defence systems by poisoning the data sets used to train AI cybersecurity products.

This phenomenon is known as data poisoning.
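A toy example helps show why this matters. The sketch below trains a deliberately simple nearest-centroid classifier on a single made-up feature (the number of hyphens in a hostname), then retrains it after an attacker slips in a few scam-like samples mislabelled as benign. The data, the feature, and the classifier are illustrative assumptions, not a description of any GovTech model.

```python
from statistics import mean

# Toy training data: (hyphens_in_hostname, label). All values are made up.
CLEAN_SAMPLES = [
    (0, "benign"), (0, "benign"), (1, "benign"), (1, "benign"),
    (4, "scam"), (5, "scam"), (6, "scam"), (5, "scam"),
]

# Poisoned additions: scam-like feature values deliberately labelled "benign".
POISON_SAMPLES = [(6, "benign")] * 4


def train_centroids(samples):
    """Compute the mean feature value (centroid) for each label."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def predict(centroids, value):
    """Assign the label whose centroid is closest to the feature value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


clean_model = train_centroids(CLEAN_SAMPLES)
poisoned_model = train_centroids(CLEAN_SAMPLES + POISON_SAMPLES)
```

With the clean data, `predict(clean_model, 4)` lands closest to the scam centroid. After poisoning, the benign centroid shifts towards scam-like values, so `predict(poisoned_model, 4)` labels the same hostname as benign.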

Overcoming Data Poisoning by Striking the Balance Between High-Quality Data and Quantity of Data

When asked if the GASP team faces issues of data poisoning, Andre states that the problem definitely exists. However, the GASP team carefully validates the dataset used to train ML models in their products to reduce the risk of data poisoning.

Taking rMSE as an example, part of the data collected comes from police cases on scam-related issues. Since police reports require police officers to officially close a case, the data collected from SPF is considered to be high-quality because it has been carefully verified by humans.

Additionally, the GASP team utilises the ScamShield database of scam reports from Singaporeans to further enhance the quality of the training dataset.
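As a rough illustration of this kind of validation, the Python sketch below keeps only training rows that come from a trusted source and have been verified. The record fields, the source tags, and the `verified` flag are hypothetical; the actual GASP data pipeline is not described in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LabelledUrl:
    url: str
    label: str      # "scam" or "benign"
    source: str     # hypothetical tag describing where the label came from
    verified: bool  # hypothetical flag, e.g. the related case has been closed


# Hypothetical source tags standing in for police cases and ScamShield reports.
TRUSTED_SOURCES = {"police_case", "scamshield_report"}


def select_training_rows(rows):
    """Keep only rows from trusted sources that have been verified."""
    return [
        row for row in rows
        if row.source in TRUSTED_SOURCES and row.verified
    ]
```

Filtering this strictly shrinks the dataset, which is exactly the trade-off between data quality and data quantity that a team has to weigh.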

However, despite the carefully validated datasets, Mark states:

“In the scams or adversarial space, the more data you have, the better. However, the quality of data is also an important factor. So, you always have to strike a balance between large training datasets and carefully validated datasets.”

Another method the GASP team uses to validate datasets is to wait for weeks or months before deciding if a website is safe or malicious.

Why is this necessary, you might wonder? Mark explains:

“One good way to overcome data poisoning is to be patient. Scammers tend to resort to something called strategic squatting where they will register a domain online, put some benign or safe content on it, and only turn the site malicious after a period of time. So sometimes there is a need to be patient in our analysis and judgement.” - Mark Chen
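The idea of being patient can be sketched as a labelling rule: a site is never declared benign until it has been observed for long enough without turning malicious. The 60-day window, the observation format, and the label names below are assumptions chosen for illustration; the article only says the team may wait weeks or months.

```python
from datetime import date, timedelta

# Hypothetical waiting period before a site may be labelled benign.
MIN_OBSERVATION = timedelta(days=60)


def provisional_label(observations, today):
    """Label a site from a list of (date_observed, looked_malicious) pairs.

    A single malicious observation is enough to label the site malicious.
    A site with only benign observations stays "pending" until it has been
    watched for at least MIN_OBSERVATION since it was first seen.
    """
    if not observations:
        return "unknown"
    if any(looked_malicious for _, looked_malicious in observations):
        return "malicious"
    first_seen = min(observed_on for observed_on, _ in observations)
    if today - first_seen < MIN_OBSERVATION:
        return "pending"
    return "benign"
```

Under this rule, a freshly registered domain showing harmless content is held as `"pending"` rather than added to the training data as a benign example, which limits what a strategic squatter can gain by behaving well for the first few days.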

Artificial Intelligence: A Double-Edged Sword

As technology advances, so do scammers’ tactics, making it clear that traditional cybersecurity methods alone are no longer sufficient. The rise of AI has introduced both new challenges and solutions to the fight against scams. GovTech’s GASP team has shown how AI can be harnessed to disrupt and prevent malicious activities, significantly improving scam detection and response in Singapore. However, the battle doesn’t stop there. Defenders must evolve AI-driven solutions to outsmart and outpace attackers as malicious actors adapt.

While the road ahead is complex, integrating AI into cybersecurity provides a beacon of hope in the ongoing fight to protect users from online scams and data poisoning.

Note: This is the first article in a two-part series.